What Donkey Kong can tell us about how to study the brain

Brain scientists Eric Jonas and Konrad Kording had grown skeptical. They weren’t convinced that the sophisticated, big data experiments of neuroscience were actually accomplishing anything. So they devised a devilish experiment.

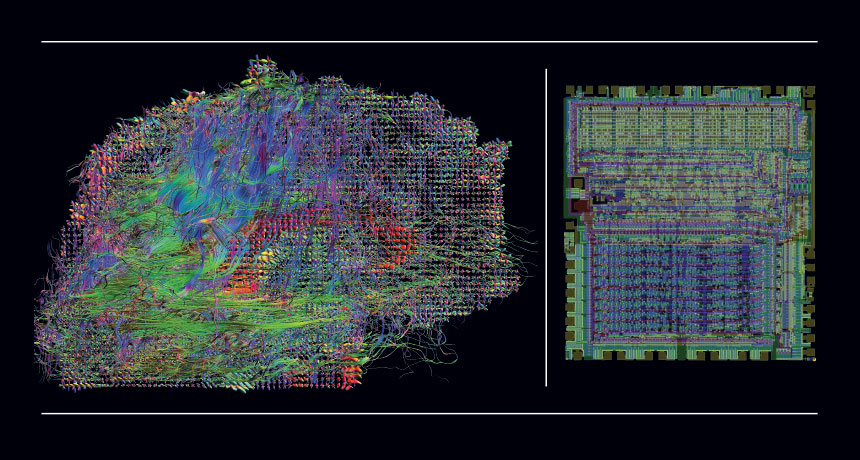

Instead of studying the brain of a person, or a mouse, or even a lowly worm, the two used advanced neuroscience methods to scrutinize the inner workings of another information processor — a computer chip. The unorthodox experimental subject, the MOS 6502, is the same chip that dazzled early tech junkies and kids alike in the 1980s by powering Donkey Kong, Space Invaders and Pitfall, as well as the Apple I and II computers.

Of course, these experiments were rigged. The scientists already knew everything about how the 6502 works.

“The beauty of the microprocessor is that unlike anything in biology, we understand it on every level,” says Jonas, of the University of California, Berkeley.

Using a simulation of the MOS 6502, Jonas and Kording, of Northwestern University in Chicago, studied the behavior of electricity-moving transistors, along with aspects of the chip’s connections and its output, to reveal how it handles information. Since they already knew what the outcomes should be, they were actually testing the methods.

By the end of their experiments, Jonas and Kording had discovered almost nothing.

Their results — or lack thereof — hit a nerve among neuroscientists. When Jonas presented the work last year at a Kavli Foundation workshop held at MIT, the response from the crowd was split. “A bunch of people said, ‘That’s awesome. I had that idea 10 years ago and never got around to doing it,’ ” Jonas says. “And a bunch of people were like, ‘That’s bullshit. You’re taking the analogy way too far. You’re attacking a straw man.’ ”

On May 26, Jonas and Kording shared their results with a wider audience by posting a manuscript on the website bioRxiv.org. Bottom line of their report: Some of the best tools used by neuroscientists turned up plenty of data but failed to reveal anything meaningful about a relatively simple machine. The implications are profound and discouraging. Current neuroscience methods might not be up for the job when it comes to truly understanding the brain.

The paper “does a great job of articulating something that most thoughtful people believe but haven’t said out loud,” says neuroscientist Anthony Zador of Cold Spring Harbor Laboratory in New York. “Their point is that it’s not clear that the current methods would ever allow us to understand how the brain computes in [a] fundamental way,” he says. “And I don’t necessarily disagree.”

Differences and similarities

Critics, however, contend that the analogy of the brain as a computer is flawed. Terrence Sejnowski of the Salk Institute for Biological Studies in La Jolla, Calif., for instance, calls the comparison “provocative, but misleading.” The brain and the microprocessor are distinct in a huge number of ways. The brain can behave differently in different situations, a variability that adds an element of randomness to its machinations; computers aim to serve up the same response to the same situation every time. And compared with a microprocessor, the brain has an incredible amount of redundancy, with multiple circuits able to step in and compensate when others malfunction.

In microprocessors, the software is distinct from the hardware — any number of programs can run on the same machine. “This is not the case in the brain, where the software is the hardware,” Sejnowski says. And this hardware changes from minute to minute. Unlike the microprocessor’s connections, brain circuits morph every time you learn something new. Synapses grow and connect nerve cells, storing new knowledge.

Brains and microprocessors have very different origins, Sejnowski points out. The human brain has been sculpted over millions of years of evolution to be incredibly specialized, able to spot an angry face at a glance, for instance, or remember a childhood song for years. The 6502, which debuted in 1975, was designed by a small team of humans, who engineered the chip to their exact specifications. The methods for understanding one shouldn’t be expected to work for the other, Sejnowski says.

Yet there are some undeniable similarities. Brains and microprocessors are both built from many small units: 86 billion neurons and 3,510 transistors, respectively. These units can be organized into specialized modules that allow both “organs” to flexibly move information around and hold memories. Those shared traits make the 6502 a legitimate and informative model organism, Jonas and Kording argue.

In one experiment, they tested what would happen if they tried to break the 6502 bit by bit. Using a simulation to run their experiments, the researchers systematically knocked out every single transistor one at a time. They wanted to know which transistors were mission-critical to three important “behaviors”: Donkey Kong, Space Invaders and Pitfall. The effort was akin to what neuroscientists call “lesion studies,” which probe how the brain behaves when a certain area is damaged.

The experiment netted 1,565 transistors that could be eliminated without any consequences to the games. But other transistors proved essential. Losing any one of 1,560 transistors made it impossible for the microprocessor to load any of the games.
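The logic of such a lesion study can be sketched in a few lines of Python. This is only a toy illustration, not the researchers' simulation: the eight-unit "chip," the three stand-in games and the `REQUIRES` dependency table are all hypothetical, invented here to show the knock-out-and-test loop.

```python
from typing import Dict, FrozenSet, List

# Hypothetical toy "chip" with 8 units (stand-ins for transistors).
UNITS = range(8)

# Hypothetical dependency table: a game "boots" only if every unit
# it relies on is intact. These sets are invented for illustration.
REQUIRES: Dict[str, FrozenSet[int]] = {
    "game_a": frozenset({0, 1, 2}),
    "game_b": frozenset({0, 1, 3}),
    "game_c": frozenset({0, 1, 4}),
}


def boots(game: str, lesioned_unit: int) -> bool:
    """Return True if the game still runs with one unit knocked out."""
    return lesioned_unit not in REQUIRES[game]


def lesion_study() -> Dict[str, List[int]]:
    """Knock out each unit in turn and sort units by what breaks."""
    results: Dict[str, List[int]] = {
        "no_effect": [],
        "breaks_all": [],
        "breaks_some": [],
    }
    for unit in UNITS:
        broken = [game for game in REQUIRES if not boots(game, unit)]
        if not broken:
            results["no_effect"].append(unit)
        elif len(broken) == len(REQUIRES):
            results["breaks_all"].append(unit)
        else:
            results["breaks_some"].append(unit)
    return results


if __name__ == "__main__":
    for category, units in lesion_study().items():
        print(category, units)
```

Even in this toy, the output is only a list of which units are necessary for which games. It says nothing about what any unit actually computes.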

Big gap

Those results are hard to parse into something meaningful. This type of experiment, like its counterparts in human and animal brains, is informative in some ways. But such experiments don’t constitute understanding, Jonas argues. The gulf between knowing that a particular broken transistor can stymie a game and actually understanding how that transistor helps compute is “incredibly vast,” he says.

The transistor “lesion” experiment “gets at the core problem that we are struggling with in neuroscience,” Zador says. “Although we can attribute different brain functions to different brain areas, we don’t actually understand how the brain computes.”

Other experiments reported in the study turned up red herrings: results that looked similar to potentially useful brain data but were ultimately meaningless. Jonas and Kording averaged the activity of groups of nearby transistors to look for patterns in how the microprocessor works. Neuroscientists do something similar when they analyze electrical patterns of groups of neurons. In this task, the microprocessor delivered some good-looking data. Oscillations of activity rippled over the microprocessor in patterns that seemed similar to those of the brain. Unfortunately, those signals are irrelevant to how the computer chip actually operates.

Data from other experiments revealed a few finds, including that the microprocessor contains a clock signal and that it switches between reading and writing memory. Yet these are not key insights into how the chip actually handles information, Jonas and Kording write in their paper.

It’s not that analogous experiments on the brain are useless, Jonas says. But he hopes that these examples reveal how big a challenge it will be to move from experimental results to a true understanding. “We really need to be honest about what we’re going to pull out here.”

Jonas says the results should caution against collecting big datasets in the absence of theories that can help guide experiments and that can be verified or refuted. For the microprocessor, the researchers had a lot of data, yet still couldn’t separate the informative wheat from the distracting chaff. The results “suggest that we need to try and push a little bit more toward testable theories,” he says.

That’s not to say that big datasets are useless, he is quick to point out. Zador agrees. Some giant collections of neural information will probably turn out to be wastes of time. But “the right dataset will be useful,” he says. And the right bit of data might hold the key that propels neuroscientists forward.

Despite the pessimistic overtones in the paper, Christof Koch of the Allen Institute for Brain Science in Seattle is a fan. “You got to love it,” Koch says. At its heart, the experiment on the 6502 “sends a good message of humility,” he adds. “It will take a lot of hard work by a lot of very clever people for many years to understand the brain.” But he says that tenacity, especially in the face of such a formidable challenge, will eventually lead to clarity.

Zador recently opened a fortune cookie that read, “If the brain were so simple that we could understand it, we would be so simple that we couldn’t.” That quote, from IBM researcher Emerson Pugh, throws down the challenge, Zador says. “The alternative is that we will never understand it,” he says. “I just can’t believe that.”